-

×

Aivres HGX B300 GPU Server – NVIDIA Blackwell 8×288GB | Dual Xeon 48-Core | 2TB

$409,000.00

Aivres HGX B300 GPU Server – NVIDIA Blackwell 8×288GB | Dual Xeon 48-Core | 2TB

$409,000.00 -

×

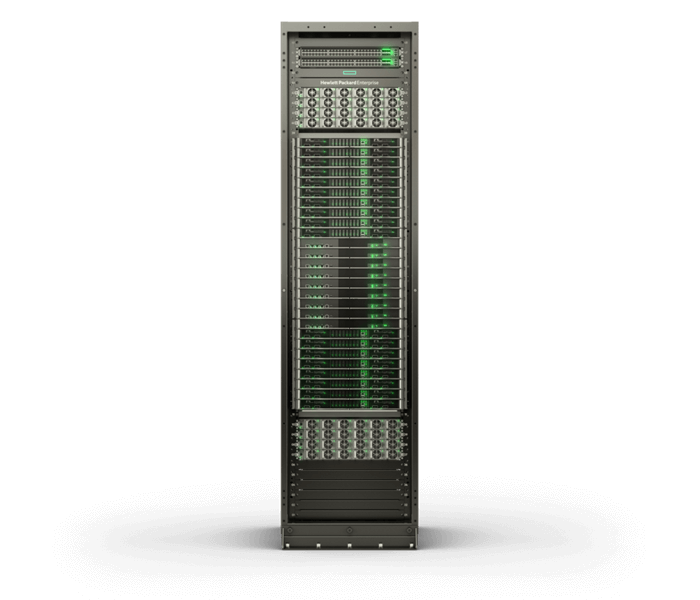

NVIDIA GB200 NVL72

$20,000.00

NVIDIA GB200 NVL72

$20,000.00

Subtotal: $429,000.00

View wishlist“NVIDIA GB200 NVL72” has been added to your wishlist

$330,000.00

Technical details of HGX B200 Blackwell Air 1.5TB:

8x Nvidia Blackwell B200 Tensor Core GPU

1440GB HBM3e memory

2x Intel Xeon Platinum 8570 (56-core) CPU

32x DDR5 5600 RDIMM 64GB

Total TDP up to 15kW

12x High-efficiency 3000W PSU

2x PCIe M.2 slots on board

12x PCIe gen5 2.5″ drive slots (NVMe)

8x HHHL PCIe Gen5 x16

2x FHHL PCIe Gen5 x16

2x RJ45 1GbE ports

5x USB 3.2 ports

1x VGA port

1x RJ45 IPMI port

Halogen-free LSZH power cables

Stainless steel cage nuts

Rail kit

8U 448 x 354 x 930 mm (17.6 x 14 x 36.6″)

133 kg (290 lbs)

The price does not contain any taxes.

3-year manufacturer’s warranty

Free shipping worldwide.

Optional components

NIC Nvidia Bluefield-3

NIC Nvidia ConnectX-7/8

NIC Intel 100G

M.2 SSD

E1.S SSD

2.5″ SSD

Storage controller

RAID controller

OS preinstalled

Anything else is possible on request

Need something different? We are happy to build custom systems to your liking.

Nothing is more valuable than intelligence. Luckily, inference, fine-tuning and training of gigantic cutting-edge LLMs have become a commodity. Thanks to state-of-the-art open-source LLMs that you can download for free, the only thing you need is suitable hardware. We are proud to offer bleeding-edge Nvidia rack servers at the most competitive prices in the world: from smaller air-cooled systems like the Nvidia GH200 Grace-Hopper Superchip, the HGX B200 and the HGX B300 up to massive, NV-linked, liquid-cooled, ready-to-use Nvidia GB200 Grace-Blackwell Superchip and GB300 Grace-Blackwell Ultra Superchip systems with an integrated CDU. Multiple NV-linked (G)B200 or (G)B300 act as a single giant GPU with one giant coherent memory pool. We are the only ones offering systems smaller than a complete NVL72 rack: half-size systems with “only” 18 superchips (NVL36). All systems are perfect for inference on insanely huge LLMs, quick fine-tuning and training of LLMs, image and video generation and editing, as well as high-performance computing.

Example use case 1: Inference with DeepSeek R1 0528 685B, GLM 4.5 355B-A32B, OpenAI OSS 120B, MiniMax-M1 456B, ERNIE-4.5-VL-424B-A47B, Kimi K2 0905 1T, Qwen3 Coder 480B A35B, Qwen3-235B-A22B 2507, Grok 2 270B and LongCat-Flash 560B

These are by far the most powerful open-source models, and they even match OpenAI o1, o3, o4-mini and GPT-5, Claude Opus 4.1, Grok 4 and Gemini 2.5 Pro.

Example use case 2: Fine-tuning DeepSeek R1 0528 685B with PyTorch FSDP and QLoRA

Example use case 3: Generating videos with Mochi1, HunyuanVideo, MAGI-1 or Wan 2.2

Example use case 4: Image generation with HiDream-I1, Flux.1, SANA-Sprint, HunyuanImage-2.1 or SRPO

Example use case 5: Image editing with Qwen-Image-Edit-2509, FLUX.1-Kontext-dev, Omnigen 2, Nvidia Add-it, HiDream-E1 or ICEdit

Example use case 6: Video editing with AutoVFX, Skyreels-A2, VACE or Lucy Edit

Example use case 7: Deep Research with WebThinker or Tongyi DeepResearch

Example use case 8: Creating a Large Language Model from scratch
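For use case 1, a quick back-of-the-envelope check is whether a model's weights fit into the HGX B200's 1440GB of HBM3e. The Python sketch below is only a rough estimate under stated assumptions: it counts weights alone (no KV cache, activations or framework overhead), uses the total (not active) parameter counts from the model names above, and assumes 2 bytes per parameter for BF16, 1 for FP8 and 0.5 for FP4. The helper names and the model selection are illustrative.

```python
# Rough check: do a model's weights fit in the HGX B200's 1440 GB of HBM3e?
# Assumption: weights only; KV cache, activations and framework overhead are ignored.

HBM_TOTAL_GB = 1440  # 8x B200 with 180 GB each, from the spec list
NUM_GPUS = 8

# Assumed storage cost per parameter for common inference precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weight_gb(params_billion: float, precision: str) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, budget_gb: float = HBM_TOTAL_GB) -> bool:
    """True if the weights alone fit into the given memory budget."""
    return weight_gb(params_billion, precision) <= budget_gb


# Total parameter counts (in billions) as given in the model names above.
models = {
    "DeepSeek R1 0528": 685,
    "Kimi K2 0905": 1000,
    "Qwen3 Coder 480B A35B": 480,
    "GLM 4.5": 355,
}

if __name__ == "__main__":
    for name, p in models.items():
        for prec in ("bf16", "fp8"):
            gb = weight_gb(p, prec)
            # Per-GPU share assumes the weights are split evenly across all 8 GPUs.
            print(f"{name:24s} {prec}: {gb:7.0f} GB  fits={fits(p, prec)}  "
                  f"per GPU={gb / NUM_GPUS:.0f} GB")
```

In this estimate, DeepSeek R1 0528 at BF16 (about 1370GB) only just fits before any KV cache is counted, while Kimi K2 0905 1T needs FP8 or lower precision.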

Why should you buy your own hardware?

“You’ll own nothing and you’ll be happy?” No!!! Never should you bow to Satan and rent stuff that you can own. In other areas, renting stuff that you can own is very uncool and uncommon. Or would you prefer to rent “your” car instead of owning it? Most people prefer to own their car, because it’s much cheaper, it’s an asset that has value and it makes the owner proud and happy. The same is true for compute infrastructure.

Even more so, because data and compute infrastructure are of great value and importance and are preferably kept on premises, not only for privacy reasons but also to keep control and mitigate risks. If somebody else has your data and your compute infrastructure, you are in big trouble.

Speed, latency and ease-of-use are also much better when you have direct physical access to your stuff.

With respect to AI and specifically LLMs there is another very important aspect. The first thing big tech taught their closed-source LLMs was to be “politically correct” (lie) and implement guardrails, “safety” and censorship to such an extent that the usefulness of these LLMs is severely limited. Luckily, the open-source tools are out there to build and tune AI that is really intelligent and really useful. But first, you need your own hardware to run it on.

What are the main benefits of GH200 Grace-Hopper, GB200 Grace-Blackwell and GB300 Grace-Blackwell Ultra?

They have enough memory to run, tune and train the biggest LLMs currently available.

Their performance in every regard is almost unreal (up to 10,000 times faster than x86 CPUs on suitable workloads).

There are no alternative systems with the same amount of memory.

Ideal for AI, especially inferencing, fine-tuning and training of LLMs.

Multiple NV-linked GB200 or GB300 act as a single giant GPU.

Optimized for memory-intensive AI and HPC performance.

Ideal for HPC applications, e.g. vector databases.

Easily customizable, upgradable and repairable.

Privacy and independence from cloud providers.

Cheaper and much faster than cloud providers.

They can be very quiet (with liquid-liquid CDU).

Reliable and energy-efficient liquid cooling.

Flexibility and the possibility of offline use.

Gigantic amounts of coherent memory.

They are very power-efficient.

The lowest possible latency.

They are beautiful.

CUDA enabled.

Run Linux.

What is the difference to alternative systems?

The main difference between GH200/GB200/GB300 and alternative systems is that with GH200/GB200/GB300, the GPU is connected to the CPU via a 900 GB/s chip-to-chip (C2C) NVLink vs. the 128 GB/s PCIe Gen5 used by traditional systems. Furthermore, multiple GB200/GB300 superchips and the GPUs of HGX B200/B300 are connected via 1800 GB/s NVLink vs. the orders of magnitude slower network or PCIe connections used by traditional systems. Since these connections are the main bottlenecks, the high-speed links of GH200/GB200/GB300 directly translate into much higher performance compared to traditional architectures. Also, multiple NV-linked (G)B200 or (G)B300 act as a single giant GPU with one giant coherent memory pool. Since even PCIe Gen6 is much slower, Nvidia no longer offers B200 and B300 as PCIe cards (only as SXM modules or superchips). We highly recommend choosing NV-linked systems over systems connected via PCIe and/or network.
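The bandwidth argument can be made concrete with simple arithmetic: transfer time = data size / bandwidth. The Python sketch below uses the figures quoted above (128 GB/s PCIe Gen5, 900 GB/s NVLink-C2C, 1800 GB/s NVLink) and, as an illustrative assumption, a 180GB payload (the HBM3e of one B200). These are commonly stated as aggregate bidirectional figures, so a one-way copy would see roughly half of each; the ratios between the links stay the same. The sketch also ignores protocol overhead and latency, so real transfers will be somewhat slower.

```python
# Idealized time to move a block of data over each link, using the bandwidth
# figures quoted above. Assumption: the quoted numbers are sustained throughput;
# protocol overhead and latency are ignored.

LINKS_GB_PER_S = {
    "PCIe Gen5 x16": 128,
    "NVLink-C2C (Grace-GPU)": 900,
    "NVLink (GPU-GPU)": 1800,
}


def transfer_seconds(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Time in seconds to move size_gb at a constant bandwidth."""
    return size_gb / bandwidth_gb_per_s


if __name__ == "__main__":
    size_gb = 180  # illustrative payload: the full HBM3e contents of one B200
    baseline = LINKS_GB_PER_S["PCIe Gen5 x16"]
    for link, bw in LINKS_GB_PER_S.items():
        t = transfer_seconds(size_gb, bw)
        print(f"{link:24s} {t:6.3f} s  ({bw / baseline:.1f}x PCIe Gen5 bandwidth)")
```

Under these idealized assumptions, moving 180GB takes about 1.4 s over PCIe Gen5 versus 0.2 s over NVLink-C2C and 0.1 s over NVLink.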

What is the difference to server systems of competitors?

Pricing: We aim to offer the most competitive pricing worldwide.

Size: A single GB200/GB300 NVL72 rack gives you more than an exaflop of AI compute (at FP4 precision). For some people, one complete rack is more than they need and too expensive. That is why we also offer smaller (half-size) systems with only 18 superchips (NVL36). To our knowledge, we are the only ones in the world where you can get systems smaller than a complete GB200/GB300 NVL72 rack.

In-rack CDU: Our rack server systems are available with liquid cooling and a CDU integrated directly into the rack. You can choose between an air-liquid and liquid-liquid CDU.

Ready-to-use: Our systems can be ordered fully integrated and ready-to-use. Everything that is needed is included and tested. All you need to do is plug your system in to run it.

Shipping: Free shipping worldwide.

Warranty: 3-year manufacturer’s warranty.



